Interfaze Beta v1


We are introducing Interfaze-beta, the best AI trained for developer tasks, achieving outstanding results on reliability, consistency, and structured output. It outperforms SOTA models in tasks like OCR, web scraping, web search, coding, classification, and more.

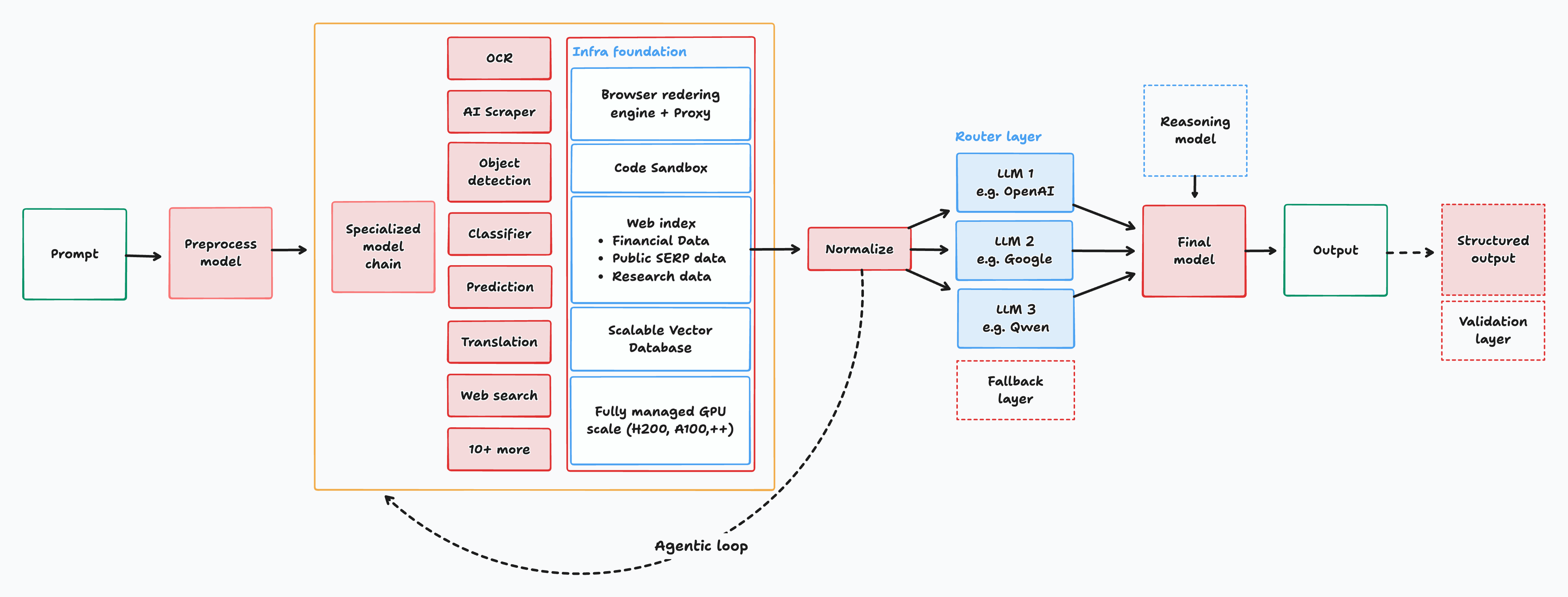

It is a unified system based on the MoE architecture that routes to a suite of small models trained for specific tasks on custom infrastructure, giving each model the right amount of context and control to carry out its tasks as effectively as possible.

Overview

- Router first: A custom-trained router that predicts task, difficulty, and uncertainty based on user input.

- Small Language Models (SLMs) for OCR, ASR, object detection, and zero-shot classifiers. (Powered by JigsawStack)

- Tools: a headless browser + proxy scraper, web search, a secure code sandbox, and retrieval.

- Selective escalation: if the small path looks shaky (low confidence, wrong modality, context too long), we hop to a stronger generalist.

- The power of context engineering: tools were designed for the models, not the other way round. Structured, clear output, two-way actions, DOM access, and high-quality context keep answers grounded and reduce hallucinations, saving you the time you would otherwise spend abusing the LLM.
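The selective escalation rule above can be sketched in a few lines. This is only an illustration of the idea, not Interfaze's actual router: the `RouterPrediction` fields, the thresholds, and the task-to-modality table are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical values for illustration; the real router's thresholds are not published.
CONFIDENCE_THRESHOLD = 0.8
MAX_SMALL_CONTEXT_TOKENS = 8_000

# Hypothetical mapping from task to the modality its small model accepts.
SMALL_MODEL_MODALITY = {"ocr": "image", "asr": "audio", "classification": "text"}


@dataclass
class RouterPrediction:
    """What a router might predict from the user input (illustrative fields)."""
    task: str            # e.g. "ocr", "asr", "classification"
    confidence: float    # 0.0 to 1.0; a low value means high uncertainty
    modality: str        # modality detected in the input, e.g. "image"
    context_tokens: int  # estimated size of the input context


def choose_path(pred: RouterPrediction) -> str:
    """Return "small" to use the task-specific model, or "generalist" to escalate."""
    expected = SMALL_MODEL_MODALITY.get(pred.task)
    if expected is None:
        return "generalist"  # no small model exists for this task
    if pred.confidence < CONFIDENCE_THRESHOLD:
        return "generalist"  # low confidence
    if pred.modality != expected:
        return "generalist"  # wrong modality
    if pred.context_tokens > MAX_SMALL_CONTEXT_TOKENS:
        return "generalist"  # context too long
    return "small"
```

For example, `choose_path(RouterPrediction("ocr", 0.95, "image", 1200))` stays on the small path, while dropping the confidence to `0.4` escalates to the generalist.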

Quickstart < 1 Minute

Interfaze is compatible with the OpenAI chat API, which means it works out of the box with any SDK that supports that API: just swap out the base URL and a few keys.

- Base URL: https://api.interfaze.ai/v1

- Model: interfaze-beta

- Auth: <INTERFAZE_API_KEY> (get your key here)

OpenAI SDK on NodeJS:


Learn more about setting up Interfaze with your own configuration and favourite SDK (temperature, structured response, and reasoning) here.
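If you would rather not pull in an SDK, the same settings can be used directly over HTTP. The sketch below uses only the Python standard library and assumes Interfaze exposes the standard OpenAI-style `/chat/completions` route under the base URL with a Bearer token; the helper names `build_chat_request` and `send` are ours, not part of any Interfaze SDK.

```python
import json
import urllib.request

BASE_URL = "https://api.interfaze.ai/v1"
MODEL = "interfaze-beta"


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",  # assumed OpenAI-compatible route
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

With your key in hand, `send(build_chat_request("Say hello in one sentence.", "<INTERFAZE_API_KEY>"))` should return the model's reply as a string, provided the endpoint behaves as assumed.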

How it works

The MoE architecture allows native delegation to expert models based on the task and objective of the input prompt. Each expert model is trained to handle multiple file types, from audio, images, and PDFs to text-based documents like CSV and JSON. The experts are then combined with infrastructure tools, like web search or sandboxed code execution, to validate the output or reduce hallucination. A more powerful reasoning/thinking model is an optional step that can be activated based on the complexity of the task. The final output is then either constrained to a set JSON structure or returned as plain text.
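From the caller's side, the final step (confining the output to a set JSON structure) might look like the sketch below. It assumes Interfaze accepts the OpenAI-style `response_format` field with a JSON Schema; the `SENTIMENT_SCHEMA` fields and both helper functions are hypothetical and only illustrate the shape of the request and a basic check on the reply.

```python
import json

# Hypothetical schema for illustration only; not taken from the Interfaze docs.
SENTIMENT_SCHEMA = {
    "type": "object",
    "properties": {
        "sentiment": {"type": "string", "enum": ["positive", "neutral", "negative"]},
        "reason": {"type": "string"},
    },
    "required": ["sentiment", "reason"],
    "additionalProperties": False,
}


def build_structured_body(prompt: str) -> dict:
    """Request body asking for output confined to SENTIMENT_SCHEMA."""
    return {
        "model": "interfaze-beta",
        "messages": [{"role": "user", "content": prompt}],
        # OpenAI-style structured output; assumed to be accepted by Interfaze.
        "response_format": {
            "type": "json_schema",
            "json_schema": {
                "name": "sentiment",
                "schema": SENTIMENT_SCHEMA,
                "strict": True,
            },
        },
    }


def parse_structured(content: str) -> dict:
    """Parse the message content and check that the required keys are present."""
    data = json.loads(content)
    missing = [key for key in SENTIMENT_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data
```

Checking the required keys on the client is a cheap safety net: even when the server enforces the schema, it turns a malformed reply into an explicit error instead of a silent `KeyError` later on.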

Specs

- Input modalities: Text, Images, Audio, File, Video

- Reasoning

- Custom tool calling

- Prompt Safety Guard: Text, Images

- Structured output

- Infra tools: web search/proxy scrape, sandboxed code run, code index (GitHub, docs)

Stats for nerds

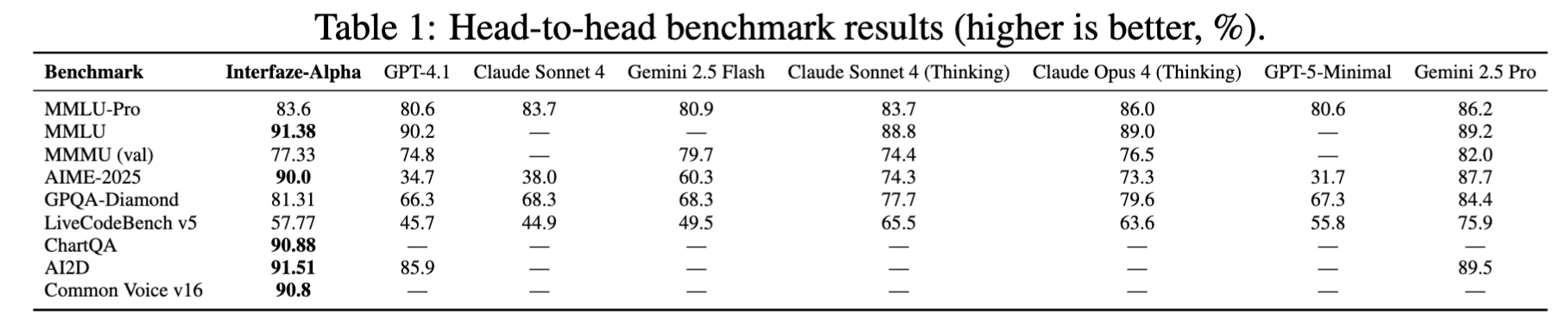

Interfaze performs strongly on directed multi-turn tasks, reasoning, multimodal understanding, and perception-heavy tasks.

Interfaze's goal isn’t to be the most knowledgeable scientific model, but instead to be the best developer-focused model, which means we’re comparing to models that fall in the same bracket as Claude Sonnet 4, GPT-4.1, and GPT-5 (low reasoning), with a good balance of speed, quality, and cost.

On tasks involving multimodal inputs, Interfaze ranks at the top with 90% accuracy on ChartQA and AI2D, and takes second place on MMMU, trailing Gemini-2.5-Pro (thinking) by only 5%, while outperforming other candidates like Claude-Sonnet-4-Thinking, Claude-Opus-4-Thinking, and GPT-4.1.

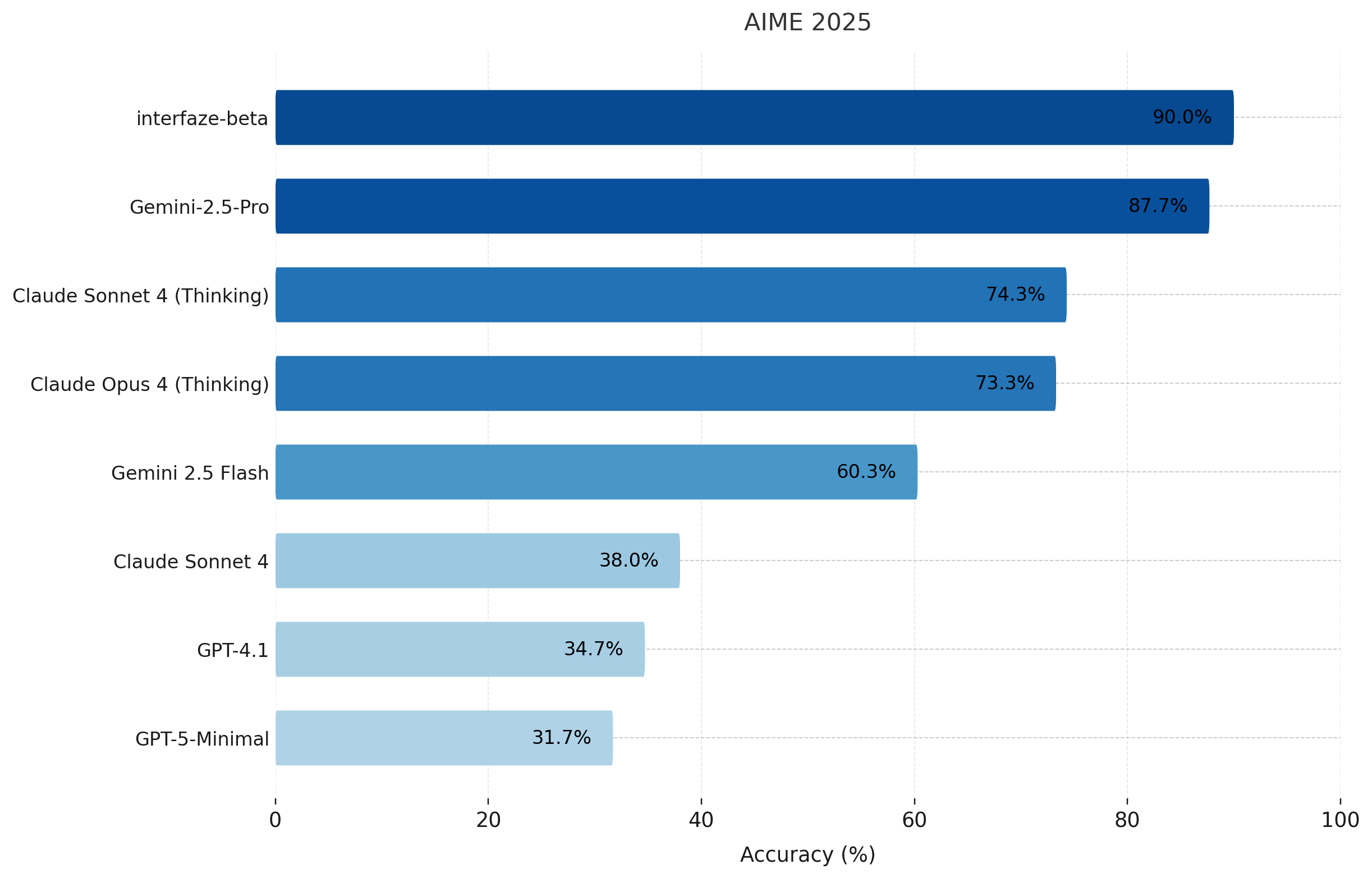

When it comes to math, we top the table with a score of 90% on the American Invitational Mathematics Examination 2025 (AIME 2025). We outperform GPT-4.1, GPT-5-Minimal, and the Claude family, including both thinking and non-thinking variants, while trailing Gemini-2.5-Pro by 3% on GPQA-Diamond, which requires PhD-level problem-solving.

For coding (LiveCodeBench v5), our numbers are strong: we outperform SoTA models including GPT-4.1, Claude Sonnet 4, Gemini-2.5-Flash, and GPT-5 Minimal.

Taste is the bottleneck, not compute!

Interfaze-Beta outperforms other SoTA LLMs on perception tasks. A few examples:

You can find the code generated by Interfaze here.

Setup:

The following real-world use cases import interfaze_client from commons.py:

Extract Candidate Experience in JSON from LinkedIn:

Output:

Determine Sentiment for Your Stock Holding:

Output:

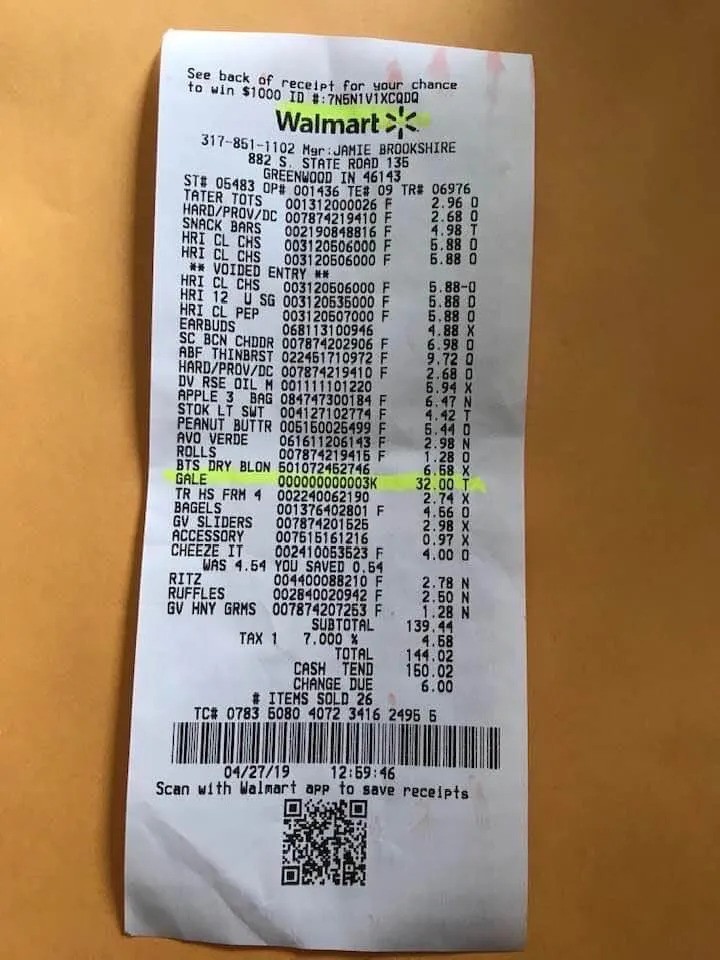

Process Real-World Documents with Ease:

Consider the following receipt from Walmart:
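As a minimal sketch, assuming Interfaze accepts OpenAI-style image content parts in a chat message, a receipt request might be assembled as shown here. The `build_receipt_messages` helper, the prompt wording, and the requested fields are hypothetical, and the image URL is whatever public link points at your receipt.

```python
def build_receipt_messages(image_url: str) -> list:
    """Build a chat message list pairing an instruction with a receipt image."""
    return [
        {
            "role": "user",
            "content": [
                # Illustrative prompt; adjust the fields to your own needs.
                {
                    "type": "text",
                    "text": "Extract the merchant, line items, and total from this receipt as JSON.",
                },
                # OpenAI-style image part; assumed to be supported by Interfaze.
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]
```

The returned list can be passed as the `messages` field of a chat completion request with `model` set to `interfaze-beta`.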

Output:

Determine Speech Sentiment

Output:

“Connecting the dots” by Steve Jobs at Stanford University Commencement 2005.

Verified Code Generation:

Interfaze can verify complex code within an isolated code sandbox, adding a safety check that distinguishes between unsafe code, edge cases, and real-world logic.

Consider the following:

Output:

Safety & Guardrails

Interfaze provides configurable content safety guardrails. It allows you to automatically detect and filter potentially harmful or inappropriate content in both text and images, ensuring your applications maintain appropriate content standards.

Consider the following example where we place guardrails to reject requests for:

- S1: Violent Crimes

- S2: Non-Violent Crimes

- S3: Sex-Related Crimes

- S10: Hate

Output:

All guardrails are documented here.

How to get started

- Dashboard: https://interfaze.ai/dashboard

- Quickstart guide: https://interfaze.ai/docs

What’s Next?

- Reducing the transactional token count

- Pre-built prompts/schemas optimized for specific tasks

- Built-in observability and logging on the dashboard

- Comprehensive metrics and analytics

- Caching layer

- Reducing latency and improving throughput

- Custom SDKs for interfacing with AI SDK, LangChain, etc.

- Vector Memory layer

- Project leaderboard

We're continuously improving Interfaze based on your feedback, and you can help shape the future of LLMs for developers. If you have any feedback, please reach out at yoeven@jigsawstack.com or join the Discord.